Welcome to part 2 of our three-part series on building agent-ready backends. If you missed part 1, Why REST APIs Aren’t Built for Agentic Workflows: Introducing the Model Context Protocol (MCP), we explored why traditional REST APIs fall short for autonomous agents - and how the Model Context Protocol (MCP) introduces a new framework for powering machine-to-machine interactions.

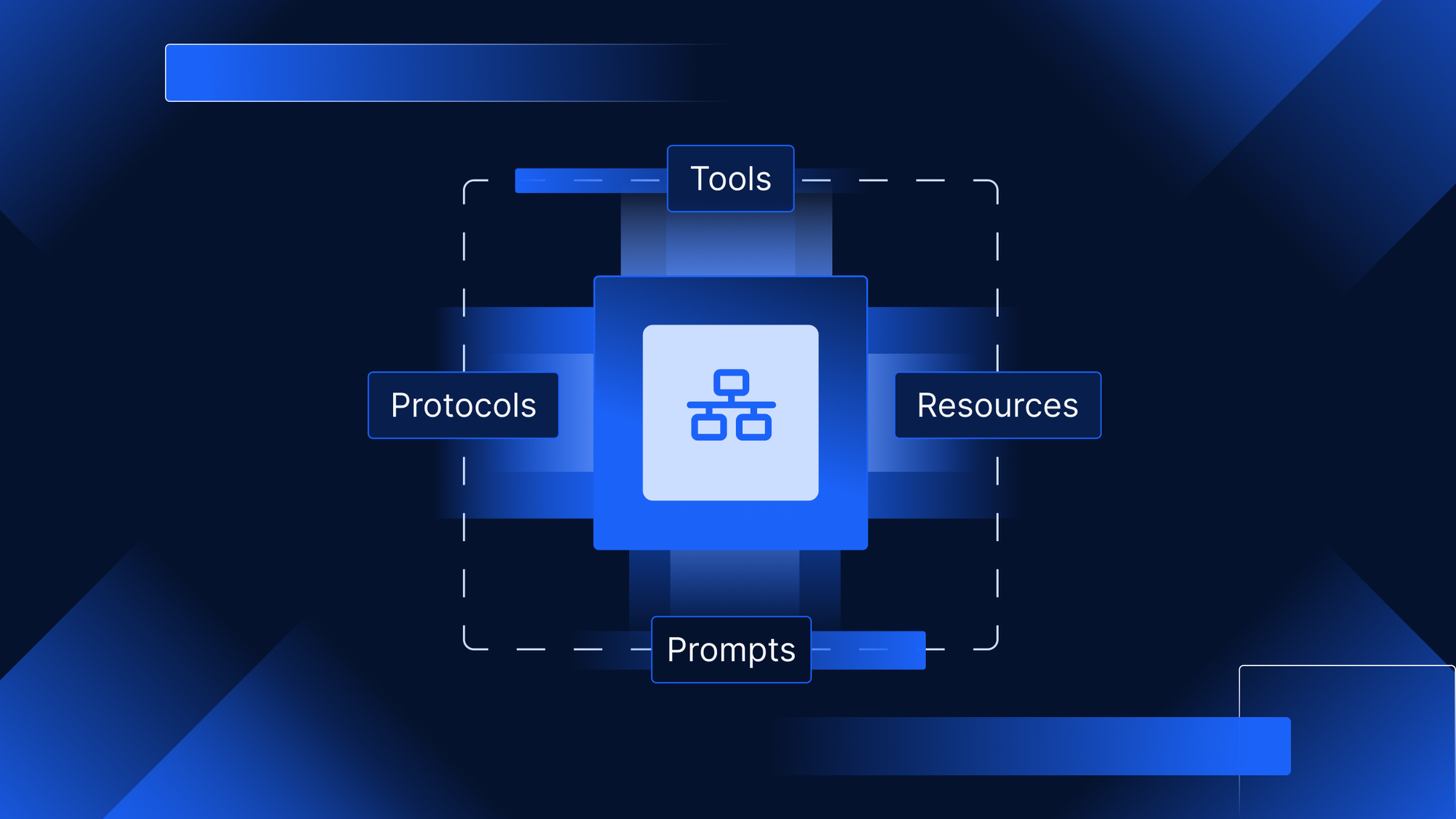

In this post, we’ll go deeper into the MCP architecture itself: how agents and MCP Servers communicate, the key components like Tools, Prompts, and Resources, and the messaging format (JSON-RPC) and transports (like HTTP and SSE) that make it all work.

We’ll also show you why remote MCP Server architecture is key for enabling distributed agent networks and collaborative, cross-agent workflows.

In part 3, Building Agent-Ready Systems with Xano: Powering the Future of M2M and A2A Workflows with MCP, we’ll shift to show you how to build an MCP-compliant backend using visual development in Xano.

Understanding the MCP Architecture for Agent-to-Agent Communication

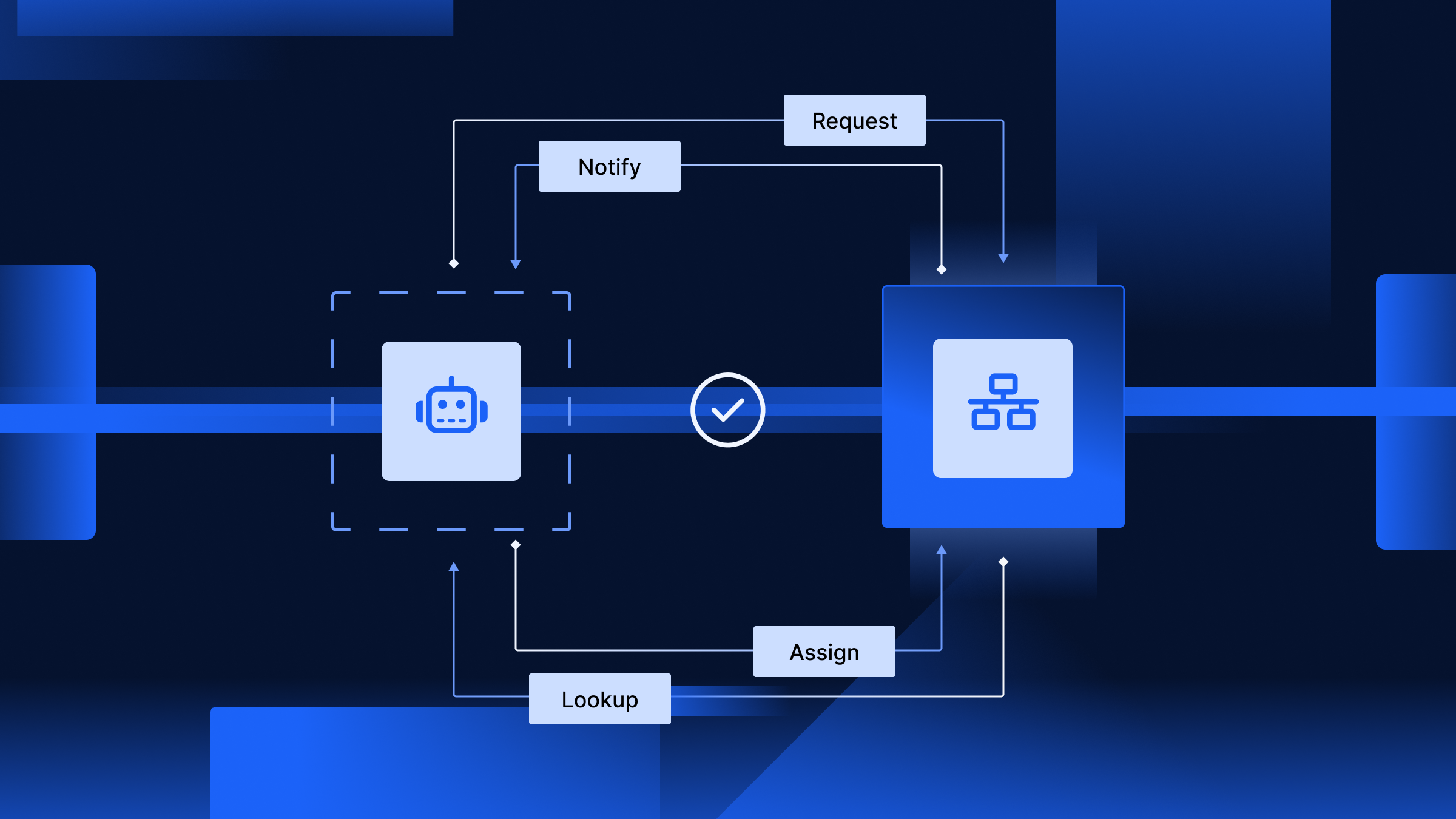

The Model Context Protocol (MCP) introduces a standard interface between MCP Clients (typically AI agents or orchestrators) and MCP Servers (services that expose callable tools). The goal is to allow agents to dynamically discover, understand, and invoke external capabilities without needing custom integrations or tightly coupled logic.

At the heart of MCP is the Client–Server model:

- The MCP Client initiates communication, asking: “What tools do you offer?” then “Here’s the input for this tool - run it and return the result.”

- The MCP Server responds by listing available tools and executing the requested tool with well-defined input/output formats.
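To make the client's side of this exchange concrete, here is a minimal Python sketch of the two requests as JSON-RPC 2.0 messages. The method names `tools/list` and `tools/call` and the `name`/`arguments` parameters follow the MCP specification. The `get_weather` tool and its `city` argument are hypothetical. The sketch also leaves out the initialization handshake a real client performs before listing tools.

```python
import itertools
import json
from typing import Optional

# Monotonic request ids so each response can be matched to its request.
_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: "What tools do you offer?"
list_request = make_request("tools/list")

# Step 2: "Here's the input for this tool - run it and return the result."
# The tool name and its arguments are hypothetical examples.
call_request = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Amsterdam"}},
)

print(json.dumps(list_request))
print(json.dumps(call_request))
```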

This structure forms the backbone of a scalable and modular MCP Server architecture, built for interoperability across AI systems.

This model is intentionally flexible: clients can be part of an AI agent, a system orchestrator, or another server. Servers can be embedded within an agent's runtime - or more powerfully, hosted as remote services.
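On the server side, the same two methods map onto a small dispatcher. The sketch below is a hypothetical in-process server, the kind that could be embedded in an agent's runtime. It answers `tools/list` and `tools/call`, returns tool output as a list of `content` items, and reports unknown methods with the standard JSON-RPC "method not found" code (-32601). The `add` tool is illustrative. A production server would also validate inputs against the schema and handle the protocol's initialization step.

```python
from typing import Any, Callable

# Hypothetical tool registry: name -> (definition advertised to clients, handler).
TOOLS: dict[str, tuple[dict, Callable[..., Any]]] = {
    "add": (
        {
            "name": "add",
            "description": "Add two numbers.",
            "inputSchema": {
                "type": "object",
                "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
                "required": ["a", "b"],
            },
        },
        lambda a, b: a + b,
    ),
}


def error(req_id: Any, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC 2.0 request and return the response object."""
    req_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        result = {"tools": [definition for definition, _ in TOOLS.values()]}
    elif method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            return error(req_id, -32602, f"Unknown tool: {name}")
        _, fn = TOOLS[name]
        value = fn(**params.get("arguments", {}))
        # Tool output goes back to the client as a list of content items.
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return error(req_id, -32601, f"Method not found: {method}")

    return {"jsonrpc": "2.0", "id": req_id, "result": result}
```

Keeping each tool's definition and handler side by side means `tools/list` cannot advertise a tool that `tools/call` is unable to run.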

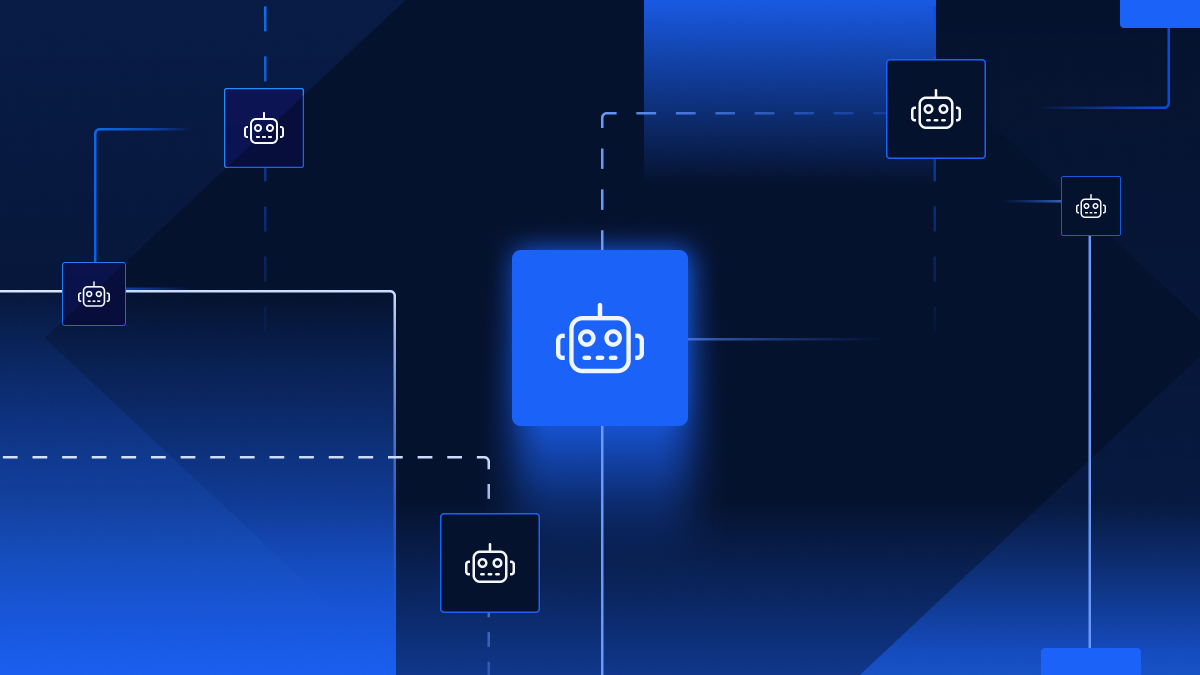

Why Remote MCP Servers Matter

While it’s possible to co-locate a server in the same environment as an agent (i.e., client-installed), doing so limits the agent to its own local capabilities. Remote MCP Servers unlock far more powerful behavior and are what make an MCP Server architecture work for distributed agents:

- Cross-agent collaboration: Agents can call tools hosted by other agents or systems, allowing shared execution and knowledge reuse.

- Tool ecosystems: A remote server can expose a tool once and have it consumed by many agents or clients.

- Separation of concerns: Clients remain lightweight, while business logic lives and scales independently on the server side.

- True networked workflows: Agents orchestrate tasks across multiple domains, connecting toolchains that live across companies, platforms, or cloud regions.

This is the foundation for interoperable, distributed agent networks.
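One way to picture a tool ecosystem from the client's side is a router that discovers tools from several remote servers and sends each call to whichever server advertised that tool. The sketch below assumes a pluggable `Transport` function in place of real HTTP calls so it runs offline. `ToolRouter`, `stub_server`, and the two tool names are hypothetical. Only the `tools/list` and `tools/call` message shapes come from MCP.

```python
from typing import Callable, Optional

# A transport sends one JSON-RPC request and returns the response. For a remote
# MCP Server this would be an HTTP call; it is injected here so the routing logic
# runs without a network.
Transport = Callable[[dict], dict]


class ToolRouter:
    """Aggregate tools from several MCP Servers and route each call to its owner."""

    def __init__(self) -> None:
        self._routes: dict[str, Transport] = {}
        self._next_id = 1

    def _request(self, send: Transport, method: str, params: Optional[dict] = None) -> dict:
        message = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        self._next_id += 1
        if params is not None:
            message["params"] = params
        response = send(message)
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def register_server(self, send: Transport) -> list[str]:
        """Discover a server's tools and remember which server offers each one."""
        tools = self._request(send, "tools/list")["tools"]
        for tool in tools:
            self._routes[tool["name"]] = send
        return [tool["name"] for tool in tools]

    def call(self, name: str, arguments: dict) -> dict:
        """Invoke a tool on whichever registered server advertised it."""
        if name not in self._routes:
            raise KeyError(f"no registered server offers tool {name!r}")
        return self._request(self._routes[name], "tools/call",
                             {"name": name, "arguments": arguments})


def stub_server(tool_name: str, reply: str) -> Transport:
    """Hypothetical stand-in for a remote MCP Server that exposes one tool."""
    def send(message: dict) -> dict:
        if message["method"] == "tools/list":
            result = {"tools": [{"name": tool_name, "inputSchema": {"type": "object"}}]}
        else:
            result = {"content": [{"type": "text", "text": reply}]}
        return {"jsonrpc": "2.0", "id": message["id"], "result": result}
    return send


router = ToolRouter()
router.register_server(stub_server("schedule_meeting", "Meeting booked"))
router.register_server(stub_server("generate_chart", "chart.png"))
result = router.call("generate_chart", {"series": [1, 2, 3]})
```

The client stays lightweight. It only keeps a name-to-server map, while each tool's logic lives and scales on the server that owns it.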

Transport & Messaging Protocols

MCP messages are encoded as JSON-RPC 2.0, and remote MCP Servers are reached over widely adopted web standards:

- HTTP: Remote servers are called with standard web requests for compatibility and scalability. (Servers installed alongside a client can instead exchange the same messages over standard input/output.)

- JSON-RPC 2.0: A structured, machine-readable format that ensures consistent method calling and response handling. Every tool call uses JSON-RPC to encapsulate the action and its parameters.

- Server-Sent Events (SSE): For tools that need to stream results or maintain session state, MCP supports long-lived interactions using SSE. This enables agents to receive data over time or manage stateful workflows.
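When a server streams over SSE, each event arrives as one or more `data:` lines terminated by a blank line. In MCP's HTTP transports, the data of each event carries a JSON-RPC message. A minimal parser might look like the sketch below. It assumes the stream has already been decoded into text lines and ignores the `id` and `retry` fields. The progress notification and result values are illustrative.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield {"event", "message"} for each SSE event whose data is a JSON-RPC message."""
    event_type = "message"  # SSE default when no "event:" field is sent
    data_lines: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event; dispatch it if it had data.
            if data_lines:
                yield {"event": event_type, "message": json.loads("\n".join(data_lines))}
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # SSE strips one leading space from the value
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
    # An event not terminated by a blank line before the stream ends is dropped.


stream = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "method": "notifications/progress", '
    '"params": {"progressToken": "t1", "progress": 50, "total": 100}}\n',
    "\n",
    'data: {"jsonrpc": "2.0", "id": 2, "result": {"content": [{"type": "text", "text": "done"}]}}\n',
    "\n",
]
events = list(parse_sse(stream))
```

Here the agent receives a progress update before the final result, which is the kind of data-over-time interaction SSE makes possible.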

MCP Core Terms

MCP introduces a consistent vocabulary and structure for agents and tools to interact. These components are designed to be machine-readable and agent-friendly:

Tools

The fundamental unit of execution in MCP. Each tool has:

- A unique name and description

- A defined input schema

- Optionally, a defined output schema for structured results

- An optional natural language prompt to guide usage

Agents invoke tools to perform specific tasks - like scheduling a meeting, generating a chart, or querying a dataset.
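As an illustration, a tool's input schema is written in JSON Schema. The MCP specification names the advertised fields `name`, `description`, and `inputSchema`. The `schedule_meeting` tool and the `check_arguments` helper below are hypothetical. The helper checks only required fields and basic property types, not full JSON Schema. A real server would more likely use a dedicated JSON Schema validator.

```python
# A hypothetical tool definition, as a server would advertise it in tools/list.
SCHEDULE_MEETING = {
    "name": "schedule_meeting",
    "description": "Book a meeting on the user's calendar.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "start": {"type": "string", "description": "ISO 8601 start time"},
            "duration_minutes": {"type": "integer"},
        },
        "required": ["title", "start"],
    },
}

# Python types accepted for each JSON Schema primitive type.
_JSON_TYPES = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "object": dict, "array": list,
}


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments look valid.

    Simplified: checks required fields and top-level types only.
    """
    schema = tool["inputSchema"]
    problems = [f"missing required argument: {name}"
                for name in schema.get("required", []) if name not in arguments]
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            continue  # JSON Schema allows extra properties by default
        expected = _JSON_TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so reject it for numeric types.
        bad_bool = isinstance(value, bool) and spec.get("type") in ("integer", "number")
        if expected and (bad_bool or not isinstance(value, expected)):
            problems.append(f"{name} should be of type {spec['type']}")
    return problems
```

Because the schema is machine-readable, an agent can check or construct its arguments before calling the tool, and the server can reject malformed input with a precise message.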

Resources

Structured data, identified by a URI, that a server exposes for agents to read and that can support tool behavior.

Examples:

- Lists of available options

- Preloaded data for agents to use in reasoning or decision-making

Resources can be exposed separately, for clients to list and read, or embedded directly in tool results.
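For example, a "list of available options" can be served as a resource. In MCP, clients discover resources with `resources/list` and fetch them with `resources/read`, which returns a `contents` array. The sketch below builds both result bodies. The `config://meeting-rooms` URI, the room names, and the helper functions are hypothetical.

```python
import json

# Hypothetical resources: URI -> (metadata advertised in resources/list, content).
RESOURCES = {
    "config://meeting-rooms": (
        {
            "uri": "config://meeting-rooms",
            "name": "Meeting rooms",
            "description": "Rooms that the scheduling tool can book.",
            "mimeType": "application/json",
        },
        ["Atlas", "Borealis", "Cygnus"],
    ),
}


def list_resources() -> dict:
    """Result body for a resources/list request."""
    return {"resources": [meta for meta, _ in RESOURCES.values()]}


def read_resource(uri: str) -> dict:
    """Result body for a resources/read request; raises KeyError for unknown URIs."""
    meta, content = RESOURCES[uri]
    return {
        "contents": [
            {"uri": uri, "mimeType": meta["mimeType"], "text": json.dumps(content)}
        ]
    }
```

An agent can read this list before calling a scheduling tool, so it only ever proposes rooms that actually exist.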

Prompts

Prompts in the Model Context Protocol (MCP) define structured, reusable instructions intended to guide language model reasoning within agent workflows. Rather than triggering a specific action like a Tool, a Prompt provides context or direction for how an LLM-based agent should interpret or respond to a task.

Prompts are especially useful when an agent needs to:

- Interpret the results of a Tool or Resource

- Reframe structured data into natural language

- Make a decision based on dynamic input

- Maintain consistency in how certain tasks are handled across systems

Example Use Case

An AI agent might call a Tool that returns structured analytics data, then use an associated Prompt to summarize or explain the data using an LLM. The Prompt ensures consistent phrasing, expectations, and behavior - even as the underlying inputs vary.
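A sketch of that pattern: the server advertises a prompt with a `name`, `description`, and `arguments`, and a `prompts/get` request returns `messages` ready to pass to an LLM. The `summarize_analytics` prompt, its template text, and the `get_prompt` helper are hypothetical. Because the template lives on the server, every agent that requests it gets the same phrasing and constraints.

```python
# Hypothetical prompt definition, as advertised in prompts/list.
SUMMARIZE_ANALYTICS = {
    "name": "summarize_analytics",
    "description": "Explain analytics results in plain language.",
    "arguments": [
        {"name": "data", "description": "Structured results returned by a tool", "required": True},
        {"name": "audience", "description": "Who the summary is for", "required": False},
    ],
}

TEMPLATE = (
    "Summarize the following analytics data for {audience} in three sentences. "
    "State the main trend first and do not speculate beyond the data.\n\n{data}"
)


def get_prompt(arguments: dict) -> dict:
    """Build a prompts/get result: the filled-in template as a user message."""
    missing = [arg["name"] for arg in SUMMARIZE_ANALYTICS["arguments"]
               if arg["required"] and arg["name"] not in arguments]
    if missing:
        raise ValueError(f"missing required prompt arguments: {missing}")
    text = TEMPLATE.format(
        audience=arguments.get("audience", "a general audience"),
        data=arguments["data"],
    )
    return {
        "description": SUMMARIZE_ANALYTICS["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }
```

The tool's raw output is passed in as `data`, so the same instructions apply however the underlying numbers change.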

Prompts are essential in multi-agent workflows where agents reason, communicate, or delegate based on LLM-driven interpretation.

Continue to part 3, Building Agent-Ready Systems with Xano: Powering the Future of M2M and A2A Workflows with MCP, to learn how to create agent-ready workflows with Xano’s visual development tools and bring your MCP Server to life.